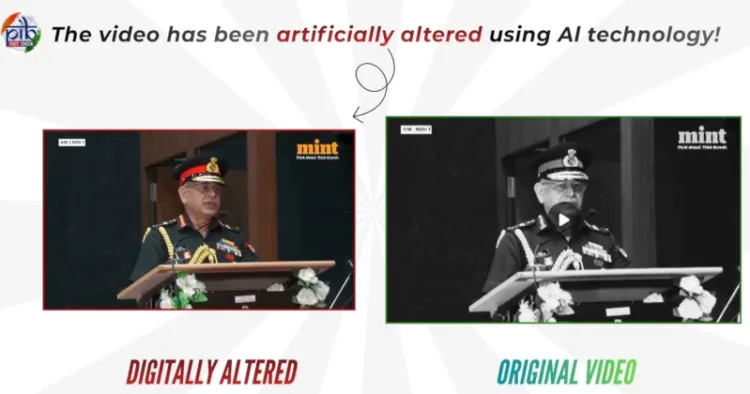

Pakistan-based propaganda accounts have unleashed a malicious AI-generated deepfake video featuring Chief of the Army Staff General Upendra Dwivedi, falsely portraying him as criticising India’s prestigious #Trishul military exercises and linking them to the upcoming Bihar elections. The fabricated video, an insidious blend of synthetic audio, visual manipulation, and political distortion, represents the latest front in the information warfare campaign against Bharat, in which enemies exploit technology to attack trust in the armed forces rather than engage them on the battlefield.

The video, which went viral across social media platforms, allegedly shows General Dwivedi addressing students in his hometown Rewa, Madhya Pradesh, making controversial statements about the “politicisation” and “saffronisation” of the Indian Army. However, an official investigation and a fact-check by the Press Information Bureau (PIB) have completely debunked the clip, exposing it as an AI-generated fake designed to mislead citizens and discredit the military’s leadership.

“❌ Chief of the Army Staff, General Upendra Dwivedi, has NOT made any such statement!

⚠️ This #AI-generated #fake video is being circulated to mislead people and create distrust against the #IndianArmedForces.”

— PIB Fact Check, on X

🚨 #AI Video Alert 🚨

Pakistani propaganda accounts are circulating a digitally altered video of Chief of the Army Staff, General Upendra Dwivedi, making claims that the #Trishul exercises are nothing but political theatre before the #Biharelections #PIBFactCheck

❌Chief of… pic.twitter.com/lkbJOji4Bn

— PIB Fact Check (@PIBFactCheck) November 3, 2025

The PIB also released the unedited version of General Dwivedi’s actual speech, available on YouTube, in which the Army Chief spoke about the challenges of future warfare, the modernisation of the defence forces, and the need for technological readiness, without a single reference to politics or elections.

A detailed analysis by the Digital Forensics, Research and Analytics Centre (DFRAC) further confirmed the synthetic manipulation. The DFRAC team performed a frame-by-frame comparison and identified mismatched facial movements, unnatural voice modulations, and AI-synthesised audio signatures consistent with deepfake technology.

DFRAC researchers traced the origin of the video to Pakistan-based social media handles, many of which are linked to coordinated propaganda networks known for amplifying anti-India narratives. “We found no instance of General Dwivedi making political comments in his Rewa address. The fake video was generated using advanced AI voice cloning tools to match his speech pattern and inserted with false subtitles to enhance believability,” the DFRAC report revealed.

The original video of General Dwivedi’s interaction, covered by The Mint and The Indian Express, shows him addressing students on the Army’s vision for the future and the critical role of youth in nation-building. The viral deepfake twisted this benign address into a politically charged attack on the government, seeking to manufacture friction between the Army and civilian institutions.

This incident is not an isolated case; it is part of a pattern of hybrid warfare orchestrated by Pakistan’s Inter-Services Public Relations (ISPR) and its online echo chambers. For years, Islamabad’s digital propaganda cells have engaged in information manipulation, ranging from fake news on Kashmir to false claims about the Indian military’s operations. The deepfake of General Dwivedi marks a dangerous escalation: the use of AI-enabled psychological warfare to erode trust in the Army’s apolitical ethos.

By targeting the #Trishul exercises, which involve India’s Northern, Western, and Central Commands in coordinated high-altitude and desert warfare simulations, the propaganda campaign aims to delegitimise one of India’s most ambitious joint defence drills. The timing, just ahead of the Bihar elections, reflects a deliberate attempt to politicise military professionalism and portray the Army as being influenced by electoral agendas, an old trope frequently employed by adversarial media outlets.

Experts warn that this is part of a larger AI-propaganda ecosystem thriving in Pakistan, China, and their extended digital networks. These ecosystems exploit deepfake generation tools, synthetic voice models, and disinformation algorithms to spread convincing forgeries within hours. Once such content goes viral, retractions or fact-checks rarely match its speed or reach.

“Deepfakes have become the new weapon of psychological warfare. They target credibility, not combat. When you cannot defeat a nation militarily, you attempt to fracture its social trust,” says cyber intelligence expert Col (Retd.) Anil Bhardwaj.

The manipulation of General Dwivedi’s video mirrors similar campaigns in the past. From fake videos of Indian soldiers in Manipur to AI-generated clips of Prime Minister Narendra Modi, India has been facing a barrage of digital warfare designed to provoke outrage, erode unity, and manipulate narratives.

Such propaganda attempts are often synchronised with hashtags, bot amplification, and coordinated posting patterns observed across X, Telegram, and Facebook groups originating from Pakistan.

Despite these malicious campaigns, the Indian Army’s credibility remains unshaken. General Dwivedi, known for his focus on technological adaptation and national integration, has emphasised modern warfare preparedness, cyber defence, and indigenous innovation, priorities that stand in stark contrast to the divisive rhetoric projected in the fake video.

The PIB has urged citizens to verify before sharing, reminding the public that the consequences of misinformation can be severe. Even a single viral fake can cause deep reputational damage, especially when amplified by hostile networks. “Stay alert. Do not forward content of this type. For authentic information, rely only on official sources,” PIB warned.