Suchir Balaji, a 26-year-old Indian-American former OpenAI researcher and whistleblower, was found dead in his apartment last month. The San Francisco Police have classified his death as a suicide.

Officers found him in his Buchanan Street apartment on November 26, the San Francisco Police said. They had gone to the apartment after his friends expressed concern for his well-being.

“Officers and medics arrived on the scene and located a deceased adult male from what appeared to be a suicide. No evidence of foul play was found during the initial investigation,” the police stated.

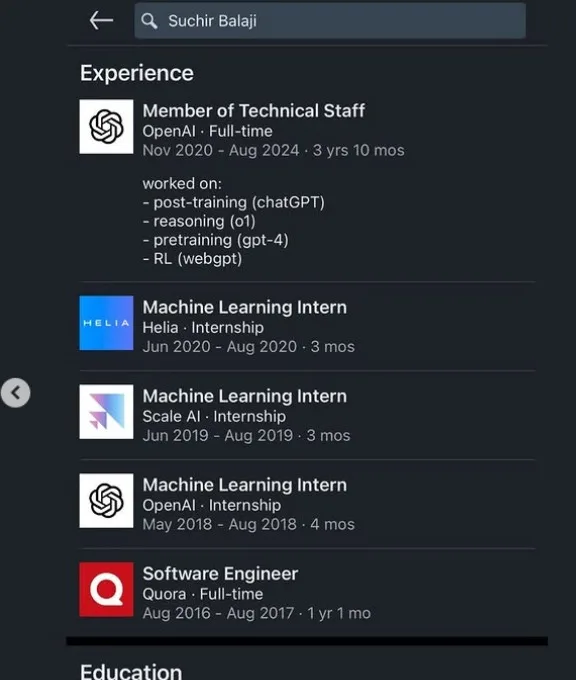

Balaji was a computer science graduate from the University of California, Berkeley, and had an impressive career trajectory that included internships at OpenAI and Scale AI during his college years.

Notably, the medical examiners have not yet revealed the cause of Balaji’s death.

In 2019, he officially joined OpenAI, where he worked for nearly four years on groundbreaking projects, including the development of GPT-4 and enhancing ChatGPT’s functionality.

His role and knowledge were considered crucial in legal proceedings against OpenAI. Three months before his death, Balaji had publicly claimed that OpenAI violated copyright law in developing ChatGPT.

Balaji, who was one of the key developers behind OpenAI’s revolutionary chatbot ChatGPT, left the company in August 2024, citing ethical concerns over the use of copyrighted data in training AI models. A day before his death, a court filing named him in a copyright lawsuit against OpenAI. The company later agreed to review Balaji’s custodial files amid growing scrutiny.

Balaji’s concerns centered on the methods used by AI systems like GPT-4, which he helped develop. He warned that such systems, trained on vast amounts of copyrighted data without explicit consent, posed risks to content creators and internet platforms. He argued that the outputs of these AI models are neither entirely novel nor exact replicas, creating legal and ethical gray areas.

“These tools can produce content that competes with the original creators, undermining their livelihoods,” Balaji said in a recent interview. He also flagged risks such as AI hallucinations, in which systems generate false or misleading information, and said such problems were reshaping the internet in harmful ways.

In his last post on X, dated October 24, he discussed his skepticism about “fair use” being a plausible legal defense for generative AI products.

https://twitter.com/suchirbalaji/status/1849192575758139733

“I initially didn’t know much about copyright or fair use,” Balaji wrote. “But after seeing the lawsuits against generative AI companies, I came to the conclusion that fair use seems like a pretty implausible defense for many AI products, especially as they create substitutes that compete with the data they’re trained on.”

OpenAI has denied the allegations, stating that its use of data falls under fair use and is essential for innovation and competitiveness.
