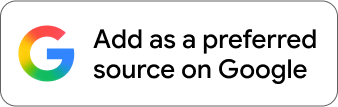

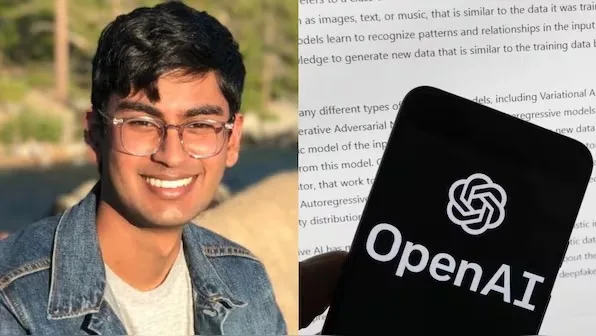

San Francisco authorities have reported the tragic death of Suchir Balaji, a 26-year-old former OpenAI researcher and whistleblower, who was found in his apartment on November 26, 2024. Police have classified his death as a suicide. Balaji, a talented computer science graduate from the University of California, Berkeley, had a remarkable career trajectory that included internships at OpenAI and Scale AI before officially joining OpenAI in 2019.

During his nearly four-year tenure at OpenAI, Balaji contributed to several groundbreaking projects, including the development of GPT-4 and enhancements to ChatGPT’s functionality. His contributions placed him among the prominent young voices in AI research. However, his promising career was abruptly cut short, leaving the tech community mourning the loss of one of its brightest minds.

Balaji resigned from OpenAI in August 2024, citing growing unease over the company’s practices. In an interview with The New York Times, he explained his decision:

“If you believe what I believe, you have to just leave the company.”

This statement reflected his increasing dissatisfaction with the ethical and legal implications of OpenAI’s methods, particularly its alleged reliance on copyrighted material for training AI models. Balaji became a vocal critic of such practices, warning of their potential to harm creators and disrupt the internet ecosystem.

In a widely circulated post on X (formerly Twitter) in October 2024, Balaji raised concerns about the misuse of copyrighted material in generative AI products:

“Fair use seems like a pretty implausible defence for a lot of generative AI products, for the basic reason that they can create substitutes that compete with the data they’re trained on.”

Balaji’s criticisms gained traction within the tech community, drawing attention to the ethical challenges surrounding AI development. In a blog post cited by the Chicago Tribune, he elaborated on his concerns:

“While generative models typically do not replicate their training data verbatim, the process of utilising copyrighted material for training might still amount to infringement.”

Balaji also called for more transparent and sustainable strategies in AI development, emphasising the need to protect creators and uphold ethical standards. His outspoken stance placed him at odds with OpenAI, which defended its practices as compliant with fair use principles.

A spokesperson for the company stated:

“We build our AI models using publicly available data, in a manner protected by fair use and related principles, and supported by longstanding and widely accepted legal precedents.”

The legal scrutiny intensified a day before Balaji’s death, when he was named in a lawsuit involving OpenAI. As part of the legal proceedings, the company was required to review files linked to him. The pressure from this development may have compounded the immense stress he was under.

OpenAI expressed condolences following Balaji’s passing. A spokesperson stated:

“We are devastated to learn of this incredibly sad news today, and our hearts go out to Suchir’s loved ones during this difficult time.”

Balaji’s untimely death has sent shockwaves through the AI research community. His contributions to AI innovation and his courage in raising ethical concerns have left an indelible mark on the industry.

Balaji’s criticisms highlight the broader ethical debate surrounding AI development. The reliance on copyrighted material for training generative AI models poses unresolved questions about the balance between innovation and intellectual property rights. While companies like OpenAI argue that their practices adhere to fair use, critics like Balaji warn of potential harm to creators and the legal risks involved.

His death serves as a sombre reminder of the personal toll that such high-stakes ethical battles can take. As the tech industry continues to grapple with these challenges, Balaji’s voice will be remembered as one that sought accountability and transparency in the rapidly evolving field of artificial intelligence.
